The State of State Content Moderation Laws

UPDATE 4/13/2023: CCIA is planning to update the interactive state legislative map for content moderation at least weekly on Friday afternoons.

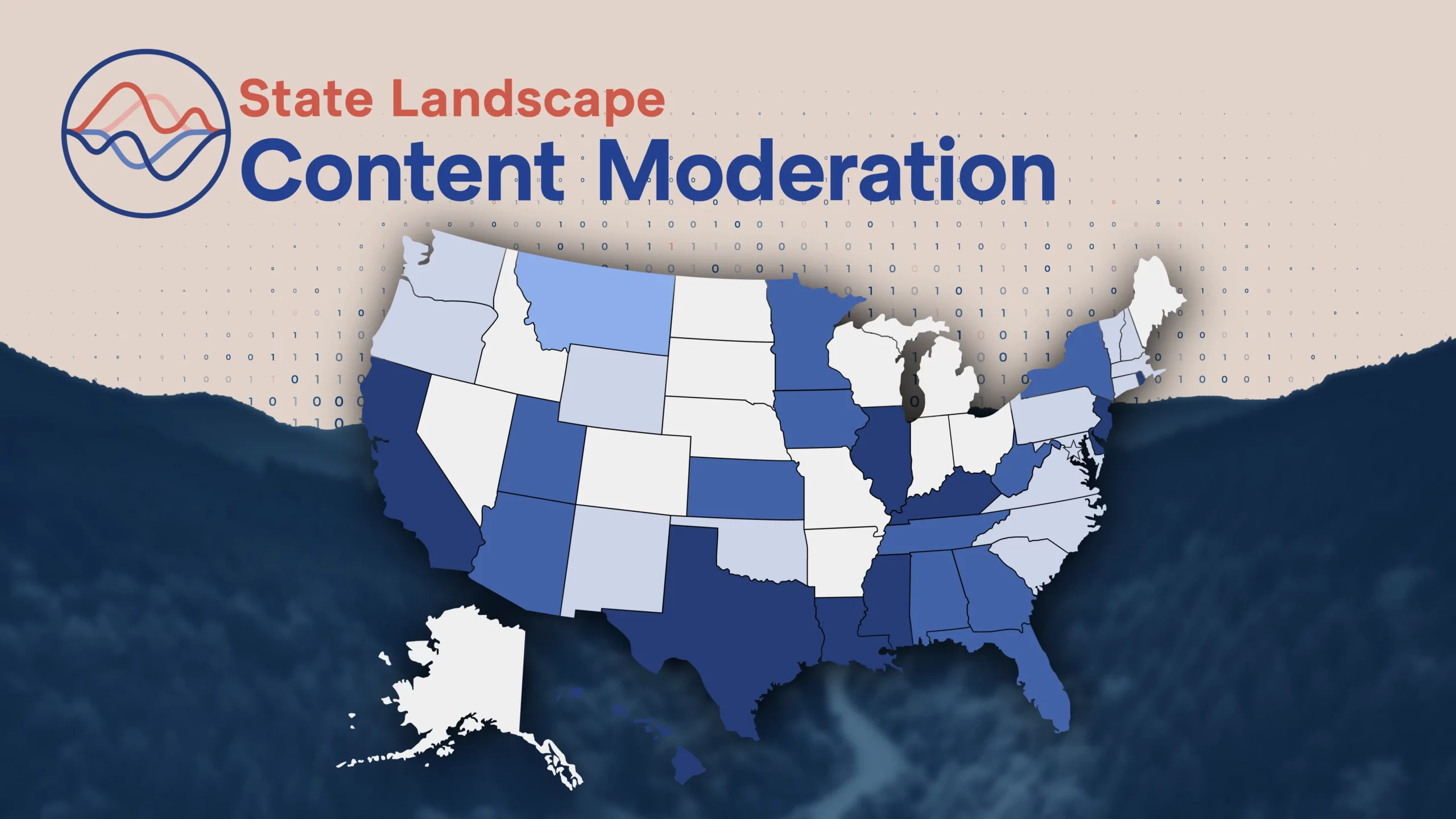

Amid ongoing debates at the federal level, state lawmakers began their own initiatives to regulate online content moderation around 2018. Since 2021, 38 states have introduced over 250 bills to regulate content across digital services’ platforms. States across the country, from California to South Carolina to New York, are considering or have enacted legislation. Many of these bills are unconstitutional, conflict with federal law (including Section 230), and would place major barriers on digital services’ ability to restrict dangerous content on their platforms.

Types of Content Moderation Measures

Legislative proposals targeted at content moderation have taken several nuanced forms, each with its own set of impacts and potential unintended consequences. See below for the different types of content moderation measures introduced this past session.

Anti-“Censorship”

States such as Florida and Texas have introduced measures to restrict certain content removal practices or the removal of specified content by online platforms. While most bill provisions apply broadly to all users, several apply specifically to content posted by, about, or on behalf of elected officials or candidates for public office. Many proposals specify steps a company must take in order to remove content, including notification and appeals processes.

Increased Content Removal

On the flip side, other bills, like those proposed in California and New York, seek to impose additional content removal requirements. These primarily require online platforms to implement new or additional strategies to moderate dangerous, illegal, false, or otherwise harmful information online and, in many cases, carry heavy penalties if such content is not taken down in a timely manner.

Transparency Reporting and Disclosure Requirements

Other measures, in states like California, Georgia, and Ohio, would require platforms to submit regular reports detailing actions taken in response to violations of their terms of service. These requirements would compel digital services to release confidential information about internal practices and would impose duplicative responsibilities on businesses with no tangible benefit to consumers.

Disclosure and Auditing or Testing Requirements for Algorithms

These bills, such as those introduced in DC and Minnesota, would impose audit reporting requirements on businesses with little clarity about when and how such audits must be conducted, while assuming these requirements can mitigate potential bias in algorithmically informed decision-making technologies. However, if definitions and reporting requirements are not narrowly tailored, these auditing requirements risk limiting beneficial uses of such technologies and overly burdening companies, particularly smaller businesses, with lengthy reporting and compliance requirements.

Child Safety

These measures, including California AB 2273 and New York SB 9563, mirror policies adopted abroad, such as the UK’s Age Appropriate Design Code, as further detailed below. Under these laws, businesses that provide online services, products, or features likely to be accessed by children must comply with specified standards or outright ban children from accessing certain services.

Key States To Watch In The 2023 Legislative Cycle

Previous legislative activity on content moderation proposals can be a good indicator of future policy conversations. Below are key states that are likely to take up content moderation legislation in the 2023 session.

California

California has been particularly active in legislating on online content moderation and algorithms, enacting several measures. These bills range from regulating devices and features on online platforms to holding companies responsible for underage users’ access to their services. Measures like AB 587 and AB 2273 carry heavy compliance requirements along with costly penalties for non-compliance. The 5Rights Foundation, a UK-based organization founded by Baroness Beeban Kidron, was a “co-source” or sponsor of AB 2273 and is likely to continue its efforts to push similar legislation across the states in the coming years.

New York

New York legislators introduced several content moderation bills this session, two of which the Governor signed. SB 9465 establishes a task force on social media and violent extremism, and AB 7865/SB 4511 requires social media networks to provide and maintain mechanisms for reporting hateful conduct on their platforms. Opponents of the latter measure maintain that its language is vague and overly broad and may unintentionally lead to wholesale bans on categories of particularly risky content when users report third-party content, creating a collateral censorship problem.

Mirroring California’s AB 2273, the New York Senate introduced SB 9563, which would impose additional data privacy and content moderation requirements on entities offering an online product targeted to users under 18. These requirements include conducting data protection impact assessments and reporting on community standards for published content, and the bill would also ban online advertising targeted to children. Though the bill is unlikely to pass before the end of the year, the traction this type of legislation is gaining in other legislatures, including California, makes it likely that these provisions will resurface in 2023.

Minnesota

Minnesota lawmakers considered eight bills concerning content moderation in 2022. While none passed both chambers, the volume of proposed legislation makes clear that legislators are interested in these topics. Among these measures, SF 3933/HF 3724 sought to regulate algorithms that target user-generated content to account holders under 18. With other children’s online safety and privacy legislation surfacing in states like California and New York, these policy discussions are likely to be widespread in 2023.

Ohio

Ohio House Republicans introduced HB 441, aimed at prohibiting a social media platform from “censoring” a user, a user’s expression, or a user’s ability to receive the expression of another. The bill also declares that any social media platform that functionally has more than 50 million active users in the U.S. in a calendar month is a “common carrier,” and it includes a private right of action that would expose social media companies to costly litigation. The bill is now in the second chamber and remains under consideration in the Senate until the Ohio Legislature adjourns on December 30, 2022.

Texas

As litigation continues over HB 20 (passed in the 2021 session), content moderation and various other issues affecting the tech industry will likely be taken up again in 2023. During the 2022 interim, the Senate Committee on State Affairs held an interim study hearing on privacy and transparency, including biometric identifiers. HB 20 has yet to take effect due to ongoing litigation; however, lawmakers may attempt to pass legislation addressing content moderation from other angles.

We anticipate that debates over content moderation will gain traction again soon after the next legislative cycle begins in 2023. As similar proposals have yet to advance in the U.S. Congress, states have taken a much more active role in legislating on these issues. A lack of harmonized provisions across the states risks creating a patchwork of laws and, consequently, making compliance more difficult for online platforms. This may deter new entrants to the market, stifle competition, and harm consumers. States considering legislation on these nuanced issues must act in a thoughtful and precise manner in order to protect the future of free speech and fair competition while also securing the best possible outcomes for consumers.
